Why the Difference Between AI and AGI is Like Confusing Fire with Fusion

- Tony Grayson

- Dec 10

By Tony Grayson, Tech Executive (ex-SVP Oracle, AWS, Meta) & Former Nuclear Submarine Commander

Marketing teams hate the truth.

We don’t have thinking machines; we have very fast pattern machines, and that distinction matters for risk and infrastructure.

If we were honest, we wouldn’t call the current wave of technology "Artificial Intelligence." We would call it "High-Speed Probabilistic Pattern Matching."

But let's be realistic: No one is paying $20/month for a subscription to OpenProbabilisticAlgorithm.

We prefer the term "Intelligence" because it implies intent. It implies reasoning. It suggests there is a "ghost in the machine" that understands why it is answering you.

There isn't.

We’ve lowered the bar for "intelligence" so far that the definition has lost all meaning. To understand the future of this technology, we first need to define the difference between AI and AGI.

The Semantic Trap (Or, The Magic 8-Ball Strategy)

If we are going to call a probabilistic text generator "Artificial Intelligence," then we should be willing to call a Magic 8-Ball "Strategic Consulting."

After all, they both give you an answer based on randomness that might accidentally be right. And in both cases, if you bet the company's strategy on it without checking the math, you deserve what happens next.

But the more dangerous comparison is physical.

If we call LLMs "Intelligence," then we should call a campfire "Nuclear Fusion."

Sure, they both produce heat and light. They both require fuel. But one is a chemical reaction we mastered thousands of years ago, and the other is the power of the sun.

The Difference Between AI and AGI

This brings us to the "AGI" (Artificial General Intelligence) narrative.

For decades, nuclear fusion has been "20 years away." It is the holy grail: infinite power, world-changing potential. But the physics are brutal, the capital costs are astronomical, and the timeline keeps slipping.

Yes, we recently achieved "ignition" in fusion labs and immediately realized how far we still are from practical energy production. We are seeing the exact same pattern with AGI. We get impressive demos (ignition), but the path to a scalable, reliable mind remains elusive.

The fundamental difference between AI and AGI is the difference between simulation and sentience.

AI (What we have now): A campfire. It consumes data to simulate patterns. It is useful but fundamentally limited by its fuel (training data).

AGI (The dream): Nuclear fusion. A self-sustaining intelligence that can reason, understand concepts, and create new knowledge without human input.

One keeps you alive tonight. The other, if we ever get it, rewrites civilization.

Two years ago, we were told AGI was imminent. Now, the goalposts are quietly moving. The narrative is shifting from "god-like superintelligence" to "Agentic Workflows" and "Efficiency Tools."

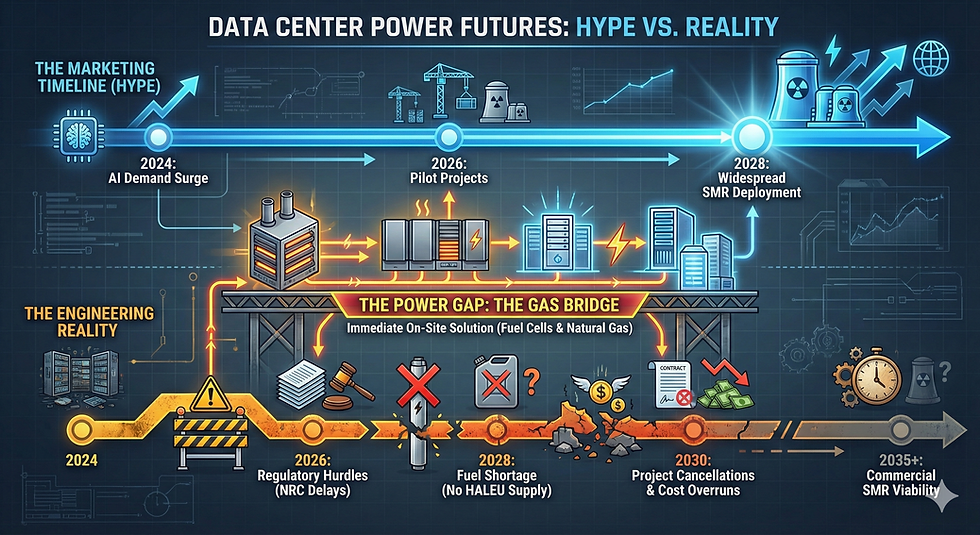

Why? Because just like fusion, we are hitting the laws of physics. We are building data centers the size of small cities, consuming gigawatts of power, hoping that if we make the campfire big enough, it will spontaneously turn into a star. (I discussed this specific infrastructure limit in my previous post, The Kemper Trap.)

The Reality Test: Refund Policies and Cloud Yelling

To see this distinction in action, look at where the illusion breaks.

Exhibit A: The "Air Canada" Liability. Air Canada's chatbot invented a refund policy that didn't exist, promising a grieving passenger a discount the airline didn't offer. When the passenger took the dispute to a tribunal, the airline argued that the bot was a separate legal entity responsible for its own words. The tribunal disagreed and held the airline responsible. The bot didn't "know" the policy; it just predicted the words that sounded like a helpful customer service agent. It prioritized being "polite" over being accurate: a classic pattern-matching failure.

Exhibit B: The "Cloud Yeller." Ask an AI to write a serious resignation letter for a job as a "Senior Cloud Yeller." It will write a heartfelt, professional letter about how much you enjoyed screaming at cumulus formations and how you are ready for new challenges in "Stratus Management."

It has no idea that the job doesn't exist. It doesn't know that yelling at clouds is a metaphor for being old and angry. It just knows the pattern of a resignation letter and fills in the blanks. It’s a form-filler, not a thinker.

The "So What?" For Business Leaders

This lack of conceptual understanding is exactly why financial services and critical infrastructure industries are hesitating.

You cannot have a compliance officer who "hallucinates" regulations because they sound plausible. You cannot have a defense system that "predicts" a threat because the pattern looked convincing.

Campfires are incredibly useful. They cook our food, they keep us warm, and they ward off predators. You don't need nuclear fusion to survive the night; a campfire works just fine.

The same applies to the "AI" we currently have.

It is excellent at summarizing data.

It is great at writing code boilerplate.

It is a fantastic tool for pattern matching.

But it is not "thinking."

Let’s stop waiting for the sun to ignite and start using the fire we actually have.

Frequently Asked Questions: AI vs. AGI

What is the difference between AI and AGI?

The difference between AI and AGI is fundamental: AI (Artificial Intelligence) refers to specific tools designed to simulate human tasks through probabilistic pattern matching—predicting the next word in a sequence based on training data. AGI (Artificial General Intelligence) refers to a hypothetical machine that possesses true cognitive abilities, reasoning, and the ability to perform any intellectual task a human can. We currently have AI, not AGI. Think of it this way: AI is a campfire (useful, limited), while AGI would be nuclear fusion (transformational, self-sustaining). A bigger campfire doesn't become a star.

Is AI actually sentient or conscious?

No, current AI models (LLMs) are not sentient or conscious. They are fundamentally probabilistic algorithms that predict the next word in a sequence based on training data. They lack feelings, beliefs, or understanding of the concepts they discuss. There is no "ghost in the machine" that understands why it is answering you. We prefer the term "intelligence" because it implies intent and reasoning—but an LLM is a compute workload, not a thought process. If we are honest, we should call it "High-Speed Probabilistic Pattern Matching," but no one pays $20/month for that.
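Here is a deliberately tiny sketch that makes "predicts the next word based on training data" concrete. It is a bigram model in Python that counts which word follows which in a toy corpus and then samples from those frequencies. Real LLMs use neural networks over subword tokens and much longer context. This simplification shows the principle only, not how any production model is built. The corpus and function names are illustrative assumptions.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count which word follows which in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word_probs(follows: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn raw follow-counts into a probability distribution over next words."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def generate(follows: dict[str, Counter], start: str, length: int, seed: int = 0) -> str:
    """Sample one word at a time. No facts are consulted, only frequencies."""
    rng = random.Random(seed)
    out = [start.lower()]
    for _ in range(length):
        probs = next_word_probs(follows, out[-1])
        if not probs:
            break  # this word was never followed by anything in training
        words, weights = zip(*probs.items())
        out.append(rng.choices(words, weights=weights, k=1)[0])
    return " ".join(out)


# Toy "training data" (hypothetical).
corpus = ("the refund policy allows a refund within 30 days . "
          "the refund policy is generous . the passenger wants a refund .")
model = train_bigrams(corpus)
print(next_word_probs(model, "refund"))  # {'policy': 0.5, 'within': 0.25, '.': 0.25}
print(generate(model, "the", 8))
```

Every sentence this model emits is stitched together from word pairs it has already seen. Nothing in the code checks whether any refund policy it describes actually exists.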

Can current AI models actually reason?

Technically, no. Models can perform "Chain of Thought" processing that appears to be reasoning, but they are simply decomposing a pattern into smaller steps based on training examples. They are not using logic or first principles to derive truth; they are mimicking the structure of logical arguments they have seen before. Research has also found that Chains of Thought, including those from dedicated reasoning models, are not always a faithful account of how the model actually reached its answer. They can omit key details, misrepresent the internal process, or offer after-the-fact justifications that are meant to sound convincing to the user.

Why do AI models hallucinate?

AI models hallucinate because they are designed to prioritize fluency over factuality. Their goal is to complete a pattern (a sentence) in the most statistically likely way, not to verify truth. OpenAI's research confirms that training and evaluation procedures reward guessing over acknowledging uncertainty, so models learn to bluff. If asked for a birthday it doesn't know, an LLM might guess "September 10": that guess has a 1-in-365 chance of being right, while saying "I don't know" guarantees zero points on benchmarks. Hallucinations aren't a bug; they're an incentive problem baked into how these systems are trained and evaluated.
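The incentive problem comes down to expected-value arithmetic. This minimal sketch assumes a scoring rule of +1 for a correct answer and 0 for "I don't know." The `wrong_penalty` parameter is a hypothetical knob added for comparison; it does not describe any specific benchmark.

```python
from fractions import Fraction


def expected_score(p_correct: Fraction, abstain: bool,
                   wrong_penalty: Fraction = Fraction(0)) -> Fraction:
    """Expected points for one question.

    Correct = +1, "I don't know" = 0, wrong = -wrong_penalty.
    With wrong_penalty = 0 this is plain right/wrong (binary) grading.
    """
    if abstain:
        return Fraction(0)
    return p_correct - (1 - p_correct) * wrong_penalty


p = Fraction(1, 365)  # blind guess at an unknown birthday

# Binary grading: guessing strictly beats abstaining, so bluffing is rewarded.
print(expected_score(p, abstain=False), expected_score(p, abstain=True))  # 1/365 0

# Hypothetical alternative: dock one point per wrong answer.
print(expected_score(p, abstain=False, wrong_penalty=Fraction(1)))  # -363/365
```

Under binary grading, any nonzero chance of being right makes guessing the better strategy. Only once wrong answers cost something does "I don't know" become competitive.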

Will adding more data and compute eventually create AGI?

Many experts argue that simply "scaling up" (adding more data and compute) yields diminishing returns, much like the challenges of nuclear fusion. Ilya Sutskever, co-founder of OpenAI, has stated that we have achieved "peak data": the internet has been scraped, and we're running out of high-quality human text for training. Research from Epoch AI projects that, if current trends continue, the stock of human-generated public text will be fully used for training sometime between 2026 and 2032. In other words, a bigger campfire doesn't magically turn into a star. We likely need a new architectural breakthrough, not just "more data," to reach AGI.

Why does AI require so much energy compared to a human brain?

The human brain operates on roughly 12-20 watts of power—less than a light bulb—while running 100 billion neurons capable of trillions of operations. A single training run for a major AI model like GPT-3 consumed about 1,300 megawatt-hours, enough to power 130 U.S. homes for a year. Switzerland's Blue Brain Project found that simulating a human brain would require around 2.7 billion watts—the output of a nuclear power plant. Data centers are consuming power in gigawatts while your brain consumes 20 watts. That's 1 billion watts vs. 20.
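These figures are easier to grasp as ratios. This sketch reuses only the numbers quoted above: 20 W for the brain, about 1,300 MWh for the GPT-3 training run, and 130 homes. It adds one labeled assumption, a single 1 GW data center campus as the reference point for "gigawatts."

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours

BRAIN_WATTS = 20                # upper end of the 12-20 W range quoted above
GPT3_TRAINING_MWH = 1_300       # approximate figure quoted above
HOMES_POWERED_FOR_A_YEAR = 130  # figure quoted above
DATA_CENTER_WATTS = 1e9         # assumption: one 1 GW campus

# Energy one brain uses in a year, converted from Wh to MWh.
brain_mwh_per_year = BRAIN_WATTS * HOURS_PER_YEAR / 1e6

# How many brain-years of energy one GPT-3 training run represents.
brain_years = GPT3_TRAINING_MWH / brain_mwh_per_year

# Annual household consumption implied by the "130 homes" comparison.
mwh_per_home_year = GPT3_TRAINING_MWH / HOMES_POWERED_FOR_A_YEAR

# Continuous power ratio: one gigawatt facility vs. one brain.
power_ratio = DATA_CENTER_WATTS / BRAIN_WATTS

print(f"brain: {brain_mwh_per_year:.4f} MWh/year")
print(f"GPT-3 training run ~= {brain_years:,.0f} brain-years")
print(f"implied home usage: {mwh_per_home_year:.1f} MWh/year")
print(f"1 GW / 20 W = {power_ratio:,.0f}x")
```

On these numbers, one training run equals roughly 7,400 years of a human brain's energy budget. A single gigawatt facility draws about 50 million times the power of one brain.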

Will AI replace financial advisors or lawyers?

It is unlikely that AI will fully replace high-stakes roles in the near term because of the "liability gap." Financial and legal services require 100% accountability. Current AI models cannot be held accountable for errors, and their tendency to be "confident but wrong" creates unacceptable risk in regulated industries. You cannot have a compliance officer who "hallucinates" regulations because they sound plausible. The Air Canada chatbot case illustrates the point: the airline's chatbot prioritized being "polite" over being accurate and invented a refund policy that didn't exist. A tribunal held the airline, not the bot, responsible.

Is ChatGPT AGI?

No, ChatGPT is AI, not AGI. It is designed to perform specific tasks like answering questions and generating text, but it doesn't possess reasoning capabilities or the ability to generalize in the way AGI would require. Ask ChatGPT to write a resignation letter for a job as a "Senior Cloud Yeller" and it will write a heartfelt, professional letter about screaming at cumulus formations. It has no idea the job doesn't exist or that "yelling at clouds" is a metaphor. It's a form-filler, not a thinker.

When will AGI happen?

Two years ago, we were told AGI was imminent. Now, the goalposts are quietly moving. The narrative is shifting from "god-like superintelligence" to "Agentic Workflows" and "Efficiency Tools." Why? Because just like fusion, we are hitting the laws of physics. Surveys of AI researchers predict AGI around 2040, though entrepreneurs are more bullish (around 2030). But prediction timelines have been sliding for decades—fusion has been "20 years away" for 50 years. We are building data centers the size of small cities, consuming gigawatts of power, hoping that if we make the campfire big enough, it will spontaneously turn into a star. That's not how physics works.

What are the real limitations of LLMs?

LLMs have fundamental limitations that scaling won't solve: they lack conceptual understanding (they predict patterns, not meaning), they prioritize fluency over factuality, they cannot verify truth, they have no persistent memory or learning capability after training, and they consume orders of magnitude more energy than biological intelligence. Research shows hallucination rates of 2.5-8.5% in general use, but up to 80-90% in specialized medical applications. Newer reasoning models may actually hallucinate MORE on complex tasks, not less. These aren't bugs to be patched—they're features of how the architecture works.
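Percentages can hide how often errors actually occur. This sketch converts the 2.5-8.5% general-use range quoted above into counts. It also assumes that errors are independent across answers, which is a simplification and not a measured property of any model. Under that assumption, it shows how quickly a multi-step task accumulates at least one bad step. The 1,000-answer batch and 10-step task are hypothetical sizes.

```python
def expected_errors(rate: float, answers: int) -> float:
    """Average number of wrong answers in a batch at a given hallucination rate."""
    return rate * answers


def p_at_least_one_error(rate: float, steps: int) -> float:
    """Chance that `steps` answers contain at least one error.

    Assumes errors are independent across steps, which is a simplification.
    """
    return 1 - (1 - rate) ** steps


for rate in (0.025, 0.085):  # the general-use range quoted above
    print(f"rate {rate:.1%}: ~{expected_errors(rate, 1_000):.0f} wrong per 1,000 answers, "
          f"{p_at_least_one_error(rate, 10):.0%} chance a 10-step task has a bad step")
```

Even at the low end of the range, roughly one in five hypothetical 10-step tasks would contain an error somewhere. That is why "confident but wrong" matters more in chained workflows than in one-off questions.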

What is AI actually good for?

Campfires are incredibly useful. They cook our food, keep us warm, and ward off predators. You don't need nuclear fusion to survive the night—a campfire works just fine. Current AI is excellent at summarizing data, writing code boilerplate, and pattern matching. It's a fantastic tool for automation and augmentation. But it is not "thinking." The key is using AI correctly: as an assistant that requires oversight, not as an oracle to blindly trust. Verify everything that matters. Don't let confident-sounding output replace your own judgment. Let's stop waiting for the sun to ignite and start using the fire we actually have.
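"Verify everything that matters" can be made mechanical. This minimal sketch assumes the assistant's reply arrives with a structured list of the policy claims it makes; how those claims are extracted is out of scope here. The policy table, key names, `DraftAnswer`, and `review` are all hypothetical. They are not any real airline's data or any product's API. In this design, the model only drafts the reply. Nothing about policy reaches a customer unless it matches the system of record, and any mismatch goes to a human.

```python
from dataclasses import dataclass

# Hypothetical system of record: the policies the business actually has.
OFFICIAL_POLICIES: dict[str, bool] = {
    "retroactive_bereavement_refund": False,
    "refund_within_24_hours": True,
}


@dataclass
class DraftAnswer:
    """A model-written reply plus the policy claims it makes (assumed extracted upstream)."""
    text: str
    policy_claims: dict[str, bool]


def review(draft: DraftAnswer) -> str:
    """Release the draft only if every policy claim matches the system of record."""
    for key, claimed in draft.policy_claims.items():
        actual = OFFICIAL_POLICIES.get(key)
        if actual is None:
            return f"ESCALATE: no official record for '{key}'"
        if actual != claimed:
            return f"ESCALATE: draft claims {key}={claimed}, record says {actual}"
    return draft.text


fluent_but_wrong = DraftAnswer(
    text="So sorry for your loss. You can apply for the bereavement discount after your trip.",
    policy_claims={"retroactive_bereavement_refund": True},
)
print(review(fluent_but_wrong))
# ESCALATE: draft claims retroactive_bereavement_refund=True, record says False
```

The polite, fluent draft is blocked because its claim contradicts the record, not because it sounds wrong. The lookup, not the model, is the source of truth.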

Why are AGI predictions so unreliable?

AGI predictions are unreliable because they conflate impressive demos with fundamental breakthroughs. We recently achieved "ignition" in fusion labs and immediately realized how far we still are from practical energy production. The same pattern applies to AI: we get impressive demos (GPT-4 passing the bar exam), but the path to a scalable, reliable mind remains elusive. Sky-high valuations of companies like OpenAI are based on the notion that LLMs will, with continued scaling, become AGI. As Gary Marcus warns, that's a fantasy. There is no principled solution to hallucinations in systems that traffic only in statistics without explicit representation of facts.

____________________________________

Tony Grayson is a recognized Top 10 Data Center Influencer, a successful entrepreneur, and the President & General Manager of Northstar Enterprise + Defense.

A former U.S. Navy Submarine Commander and recipient of the prestigious VADM Stockdale Award, Tony is a leading authority on the convergence of nuclear energy, AI infrastructure, and national defense. His career is defined by building at scale: he led global infrastructure strategy as a Senior Vice President for AWS, Meta, and Oracle before founding and selling a top-10 modular data center company.

Today, he leads strategy and execution for critical defense programs and AI infrastructure, building AI factories and cloud regions that survive contact with reality.
